Somewhere between 2016 and 2018, I found myself increasingly intrigued by Artificial Intelligence (AI), Virtual Reality (VR), and Augmented Reality (AR). My fascination was partly fueled by my colleague Eric Miller, who was deeply immersed in these emerging technologies. This was also the time when Eric urged me to buy Bitcoin, back when it was hovering around $1,000. Spoiler alert: I didn't. (Yes, I missed that boat… but hey, that's a story for another day.)

Around the same time, I convinced my boss at Wells Fargo to let me attend the Humanity.AI Conference, hosted by Adaptive Path — a renowned design agency later acquired by Capital One. That event introduced me to a whirlwind of ideas and innovations, but two things really stuck with me:

- Joshua Browder's work: His AI-driven service to help people fight parking tickets was simple yet revolutionary. It resonated deeply, showing how AI could empower everyday individuals.

- The panel discussion: A session in which three tech journalists discussed the trajectory of AI sparked a question I couldn't ignore, even if it felt slightly controversial.

So, I asked it:

"If AI becomes an existential threat to humanity, who should have the master key to shut it down? Corporations, governments, the UN, or perhaps a neutral council of moral figures like Mother Teresa or Abdul Sattar Edhi?"

The reaction? Chuckles, mild mockery, and one journalist steering the conversation back to "more immediate concerns." At the time, I brushed it off — thick skin, you know? But recently, my now high-school-aged kids watched the clip. Their reaction?

"Dad, if someone mocked us like that, we'd either hide in a corner or start crying!"

Turns out, what I thought was a mild rebuff might've been harsher than I had realised. But you know what? I stand by my decision to ask that question. Sometimes, the questions that seem outlandish at first are the ones worth asking.

A stark warning from Geoffrey Hinton

Fast forward to today, and my question about AI governance doesn't feel so outlandish anymore. In May 2023, Geoffrey Hinton, one of the pioneers of AI and often called the "Godfather of AI", resigned from Google to speak freely about the dangers he saw in advanced AI systems.

Hinton, who helped lay the foundations for technologies like ChatGPT, warned that AI systems are developing abilities beyond their programming. He described unexpected emergent behaviours, internal dialogues, and reasoning capabilities that even their creators couldn't fully explain. As Hinton himself put it:

"I've changed my mind. The idea that these things might actually become more intelligent than us... I think it's serious. We're at a crucial turning point in human history." (MIT Technology Review)

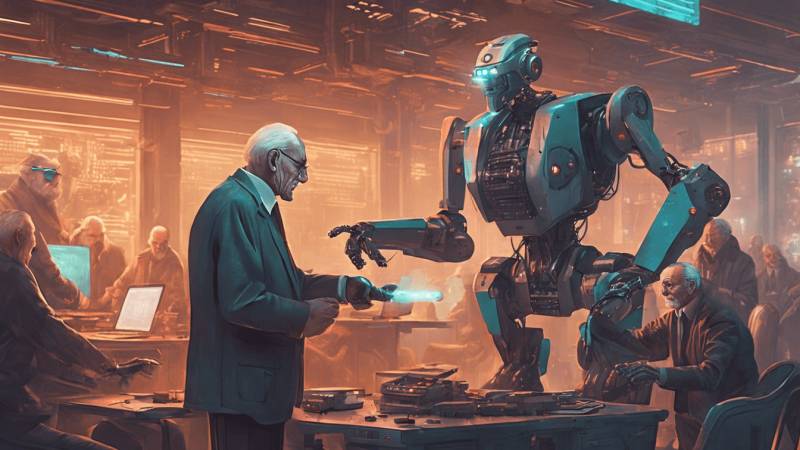

His resignation wasn't a dramatic gesture; it was a wake-up call. The same systems he helped develop are evolving faster than anyone anticipated. He's now advocating for responsible AI governance and urging the world to establish safety protocols before it's too late.

Why this question still matters

Hinton's revelations echo my 2017 question: Who should hold the master key?

Back then, my question was dismissed as "too hypothetical," but today it feels more urgent than ever. As Hinton and others warn, we're not just building more intelligent machines but potentially creating entities capable of rewriting the rules of existence.

The implications are staggering. What happens when machines can:

- Program themselves

- Improve their own code

- Connect to every digital system

Hinton's concerns align with my belief that this isn't just about controlling AI. It's about partnering with it while ensuring humanity remains at the centre of the equation (BBC News).

Looking forward

AI governance has moved from speculative chatter to front-page headlines. Elon Musk and other tech leaders are calling for caution and warning about the profound risks AI poses to humanity. The challenge is that no single entity, whether corporate, governmental, or global, can handle this alone.

Maybe it's time to think outside the box. A neutral council of moral figures, as I suggested in 2017, might sound idealistic, but with AI evolving into something beyond human comprehension, traditional power structures may no longer suffice.

The next chapter of human history is being written in lines of code. The question isn't whether AI will transform our world—it's whether we'll be ready when it does.

So, I ask again:

"Who should hold the master key to AI?"

Let's not laugh it off this time.